Exploring the Dark Side of Package Files and Storage Account Abuse

Hello All,

In this blog, we dive into the dark side of package files and Storage Account abuse within the Azure Function App service. We look at how Function Apps use package files to run compiled code and how those same package files can be abused. By examining the connection between the Function App and the Storage Account, we uncover how attackers can abuse the Storage Account's connection string to gain unauthorized access to the Function App. We then walk step by step through replacing binary files and deploying custom code, which lets an attacker take control of the Function App.

The research was jointly done by Raunak and myself.

What are Function Apps?

Function Apps (the Azure counterpart of AWS Lambda) are a serverless computing service provided by Azure. They allow developers to build and deploy small functions that are triggered by events, such as a simple HTTP request. Function Apps provide an environment for executing our code without the need to manage the underlying infrastructure: operating system, storage, load balancers, RAM, networking, and so on. They are designed to simplify the development and deployment of individual functions, abstracting away the complexities of infrastructure management and enabling developers to focus solely on writing code.

Function App:

The first step in working with Function Apps is to create and configure the Function App. This involves specifying the programming language, runtime, and any additional settings required for the code. The Function App also provides an option of a container for hosting and managing our code.

Write Function Code:

Once the Function App is set up, developers can start writing the code for their functions. Each function should be designed to perform a specific task or handle a particular event. The code can be written in various programming languages supported by the Function App, such as C#, JavaScript, Python, or PowerShell. The code is only executed when a specific event is triggered.

Triggers:

Triggers determine when the function should be executed. Function Apps support a wide range of event-based triggers such as HTTP requests, timers, message queues, or database updates. Developers can choose the appropriate trigger(s) based on the requirements of their functions.

Bindings:

Bindings define the input and output data for the function. They provide a seamless way to integrate with other services, such as databases, storage accounts, or third-party APIs. By configuring bindings, developers can easily access and manipulate data from various sources within their functions.

Deploy:

Once the function code, triggers, and bindings are set up, the Function App can be deployed.

The connection between Storage Accounts and Function Apps

Storage Accounts play a big role in Function Apps because the two are directly connected. In simple words, all the files we create in the Function App are stored in the Storage Account. Storage Accounts offer four types of storage: File Shares, Containers, Tables, and Queues. Function Apps use a File Share, which acts like an external disk, to store their files.

So now the question is how the Function App interacts with the Storage Account; that’s where all the magic happens. The Function App uses the connection string of the Storage Account, which is stored in its environment variables, typically via Application Settings. So, if an attacker gets code execution in the Function App, they can simply run the env or set command (depending on the operating system) to list all the environment variables, which reveals the Storage Account's connection string.
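To make the shape of that connection string concrete, here is a minimal sketch that scans a set of environment variables for storage connection strings and splits them into their parts. The setting name `AzureWebJobsStorage` used in the usage note is the usual Function App setting but is not named in this post, and the helper names are hypothetical.

```python
import os


def parse_connection_string(conn_str: str) -> dict:
    """Split a 'Key=Value;Key=Value' connection string into a dict."""
    parts = {}
    for segment in conn_str.split(";"):
        if not segment.strip():
            continue
        # Split on the first '=' only: base64 account keys end in '=' padding.
        key, _, value = segment.partition("=")
        parts[key.strip()] = value.strip()
    return parts


def find_storage_settings(environ=os.environ) -> dict:
    """Return every variable whose value looks like a storage connection string."""
    found = {}
    for name, value in environ.items():
        if "AccountKey=" in value:
            found[name] = parse_connection_string(value)
    return found


if __name__ == "__main__":
    for name, parts in find_storage_settings().items():
        print(name, "->", parts.get("AccountName"))
```

Run inside the Function App's process, this would typically surface settings such as `AzureWebJobsStorage`; the sketch prints only the account name rather than the key.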

When we create functions in Python, NodeJS or PowerShell we can clearly see all files in the Storage Account and read the source code for the function as shown below.

Function App running from Package File

While creating functions we have multiple options that can be leveraged to deploy our Function Apps. One simple way is using the Azure portal itself and another is using VS Code or any other IDE that supports deployment of Function Apps. In this blog, we will focus on dotnet based Function Apps.

Let us look at the code when we create a simple HTTP Trigger in the Function App using the Azure portal.

Default dotnet code for HttpTrigger.

We can see the file name “run.csx” that was created by default. If we want to test the code, we can simply click on the “Get function URL” and open it in the browser.

Let us look at the configurations of the Function App when we create a new function from the portal.

However, developers typically do not use the Azure portal to deploy code; they prefer to deploy from an IDE. So, let us use VS Code to create a new Function App.

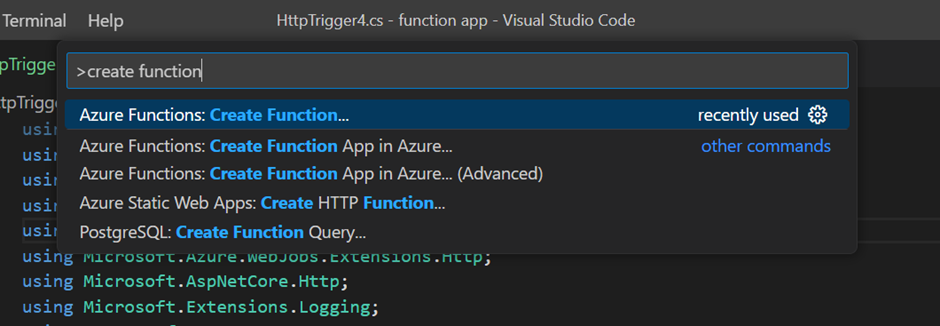

Press Ctrl + Shift + P and search for “create function”.

Provide the details, making sure to select dotnet as the programming language, and our Function App will be created locally. To deploy the Function App code, we need to authenticate to the Azure account in VS Code.

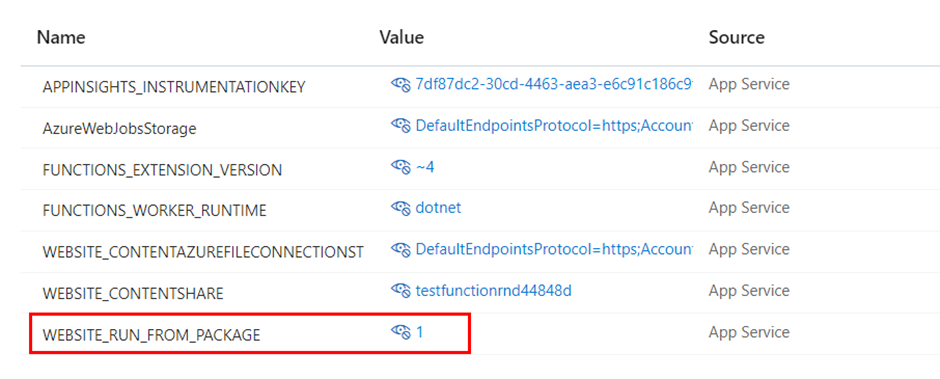

We will find that the configuration setting for our Function App has been modified once the deployment is completed.

We can see that the “WEBSITE_RUN_FROM_PACKAGE” value is changed from 0 to 1. The value 1 indicates “True” which means our Function App is leveraging the package file which contains our compiled code.
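As a small illustration of how this setting can be read, the sketch below classifies a `WEBSITE_RUN_FROM_PACKAGE` value. The `0` and `1` cases come from the observation above; the URL case (a package hosted at a remote location) is taken from Azure's documentation rather than from this post, and the function name is hypothetical.

```python
def describe_run_from_package(value):
    """Classify a WEBSITE_RUN_FROM_PACKAGE app setting value."""
    if value is None or value.strip() in ("", "0"):
        return "disabled"          # files are served from the content share
    cleaned = value.strip()
    if cleaned == "1":
        return "local-package"     # zip package stored alongside the app
    if cleaned.lower().startswith(("http://", "https://")):
        return "remote-package"    # zip package fetched from a URL
    return "unknown"


if __name__ == "__main__":
    import os
    print(describe_run_from_package(os.environ.get("WEBSITE_RUN_FROM_PACKAGE")))
```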

Let us look at our Function App functions in the portal.

In this JSON file we can clearly see a “scriptFile” parameter with the value “../bin/function app.dll”. This means that the .cs file has been compiled into a library file, and whenever the trigger fires, the code in the .dll file is executed.

The challenge we had faced initially!

In a standard deployment that uses a non-compiled language such as Python or NodeJS, we can easily read and modify the functions from the Storage Account. But our case is different. Since the Function App is now running compiled code from a package, we won’t be able to read the source code directly. However, remember that warning message about zip files! Let’s find out where those zip files are located in the Storage Account.

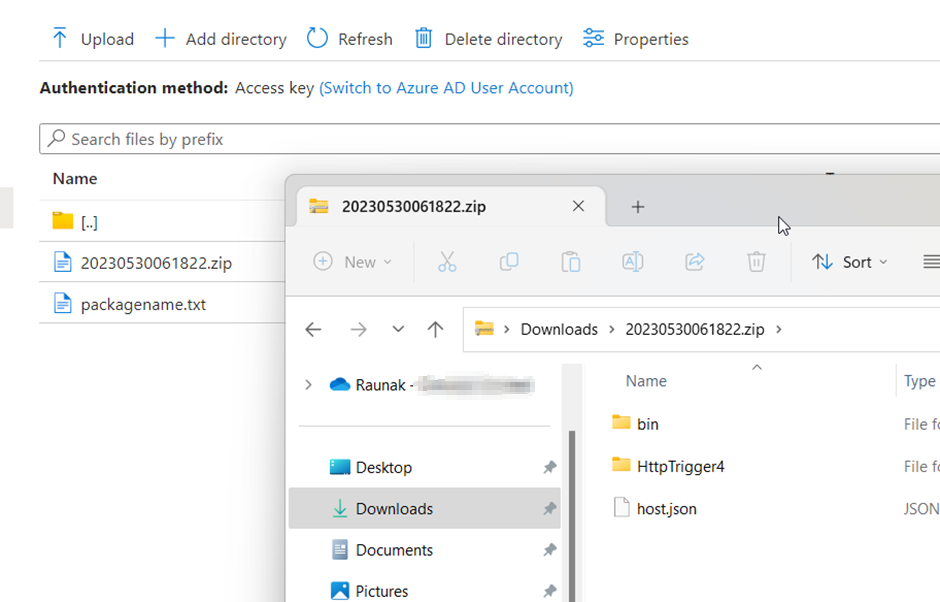

The zip files are located in data/SitePackages. This folder also holds the zip files of all the older deployments.
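Once a package zip has been downloaded from the file share, its layout can be inspected without extracting it. The following is a rough sketch that lists each function folder and the "scriptFile" and "entryPoint" values from its function.json. It assumes the layout described in this post (one folder per function at the root of the zip); the function name and the sample file name are hypothetical.

```python
import json
import zipfile


def list_functions(package) -> dict:
    """Map each function name to the scriptFile/entryPoint in its function.json."""
    functions = {}
    with zipfile.ZipFile(package) as zf:
        for name in zf.namelist():
            parts = name.split("/")
            # Only look at <FunctionName>/function.json at the root of the zip.
            if len(parts) == 2 and parts[1] == "function.json":
                # utf-8-sig tolerates a byte-order mark at the start of the file.
                config = json.loads(zf.read(name).decode("utf-8-sig"))
                functions[parts[0]] = {
                    "scriptFile": config.get("scriptFile"),
                    "entryPoint": config.get("entryPoint"),
                }
    return functions


if __name__ == "__main__":
    import sys
    # Usage: python inspect_package.py <downloaded-package>.zip
    for fn, meta in list_functions(sys.argv[1]).items():
        print(fn, meta)
```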

Since the code was written in C#, we knew that we might be able to read it with the dnSpy tool. Using dnSpy, we decompiled the “function app.dll” file, where we could see our httptrigger4 function.

Now let’s think from an attacker’s point of view. We have read/write permission over the Storage Account’s file share, and we manage to get the zip file that contains the source code of the Function App. The Function App code may contain sensitive information such as credentials for other services. We can introduce our own code that can perform malicious actions such as allowing us to execute system commands or trying to get a reverse shell (not recommended).

Exercise for the reader: Modify the code to add a backdoor to the existing function in the Function App.

Backdoor functions

An additional approach to backdooring the Function App is to introduce a new function. We can simply create an additional folder with our new trigger name, add the "function.json" binding file, and point the "scriptFile" parameter to the new .dll file that contains our code.

In VS Code we can create a new Function App and add a new Http Trigger function to the Function App and compile the same. It will generate the .dll file and the .pdb file.

We will also need to create an empty folder in the root directory of our zip file with the name of our trigger and place the "function.json" file that will contain the binding configuration.

Note: "scriptFile" & "entryPoint" parameter must be modified based on the code and the file name.
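To show what such a binding file might look like, here is a sketch that builds a function.json for an HTTP trigger. The field set mirrors what the .NET tooling typically generates for a compiled function, which is an assumption rather than something shown in this post, and the folder, DLL, and entry point names are placeholders to be replaced with the real ones.

```python
import json


def build_function_json(script_file: str, entry_point: str) -> str:
    """Return function.json text for an HTTP-triggered, precompiled function."""
    config = {
        "configurationSource": "attributes",
        "bindings": [
            {
                "type": "httpTrigger",
                "methods": ["get", "post"],
                "authLevel": "function",
                "name": "req",
            }
        ],
        "disabled": False,
        # Path is relative to the function's own folder inside the package.
        "scriptFile": script_file,
        # Fully qualified method name: Namespace.Class.Method
        "entryPoint": entry_point,
    }
    return json.dumps(config, indent=2)


if __name__ == "__main__":
    # Hypothetical names; adjust to the compiled assembly and its entry method.
    print(build_function_json("../bin/NewFunction.dll", "Company.Function.NewTrigger.Run"))
```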

Once the code is synced, we will be able to see our new function added to the Function App on the portal.

Let’s rebuild this application, copy the .dll and .pdb files into the bin folder of the zip file, and upload the zip file to the file share again. After the Function App has synced with the updated file, we can visit the URL again.
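The repackaging step can be sketched as follows for a lab setup. Python's zipfile module cannot overwrite an entry in place, so the sketch copies the original archive into a new one and substitutes or adds the listed entries. The function name and all paths in the usage comment are hypothetical.

```python
import zipfile


def rebuild_package(src_zip, dst_zip, replacements: dict) -> None:
    """Copy src_zip to dst_zip, replacing or adding entries from `replacements`.

    `replacements` maps archive paths (e.g. 'bin/App.dll') to their new bytes.
    """
    remaining = dict(replacements)
    with zipfile.ZipFile(src_zip) as src, \
            zipfile.ZipFile(dst_zip, "w", zipfile.ZIP_DEFLATED) as dst:
        for info in src.infolist():
            data = remaining.pop(info.filename, None)
            if data is None:
                data = src.read(info.filename)  # unchanged entry, copy as-is
            dst.writestr(info.filename, data)
        # Anything left over did not exist in the source, so add it as new.
        for name, data in remaining.items():
            dst.writestr(name, data)


# Example (hypothetical paths):
# with open("build/App.dll", "rb") as dll:
#     rebuild_package("original.zip", "updated.zip", {"bin/App.dll": dll.read()})
```

Writing to a separate output file keeps the original package untouched, which makes it easy to restore the previous deployment afterwards.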

And here we go. Now we are sure that we control this function in the Function App and can perform any action the function is capable of, such as executing system commands.

If the Function App had managed identity enabled and if it had some privileges assigned on any other Azure resources, we could have tried to enumerate more and move laterally in the Azure environment.

We can also extract the Master Key from the Storage Account and use it to add new functions. The NetSPI team has published more details on decrypting the Master Key.

Additionally, we can modify the "encrypted" parameter value to "false" and use the Master Key value directly to perform our actions.

Feel free to send me feedback on Twitter @chiragsavla94

Thanks for reading the post.

Special thanks to all my friends who helped, supported, and motivated me to write blogs. 🙏
